Data Masking vs Tokenization in Snowflake

Data Masking vs Tokenization in Snowflake: Strategies for Sensitive Data

Organizations must protect sensitive data (PII, PHI, PCI, etc.) stored in the Snowflake Data Cloud. Two common approaches are data masking and tokenization, which both hide real data from unauthorized users but work differently.

In Snowflake, dynamic data masking uses masking policies applied at query time, while tokenization replaces data with tokens before loading and detokenizes on query via external functions.

Understanding Data Masking

Data masking involves hiding original data by substituting or obfuscating it, ensuring that sensitive information isn't exposed to unauthorized users. In Snowflake, Dynamic Data Masking (DDM) allows for policy-driven transformations of column values at query time, without altering the underlying data.

Snowflake masking supports different techniques:

- Full masking: completely hide a value (e.g. show ** for all non-authorized users).

- Partial masking: reveal only a part (e.g. mask all but last 4 digits of a credit card).

- Pseudonymized masking: replace value with a deterministic surrogate (e.g. SHA2() hash). This keeps identical inputs mapping to the same output, so data can still be aggregated or joined on masked values.

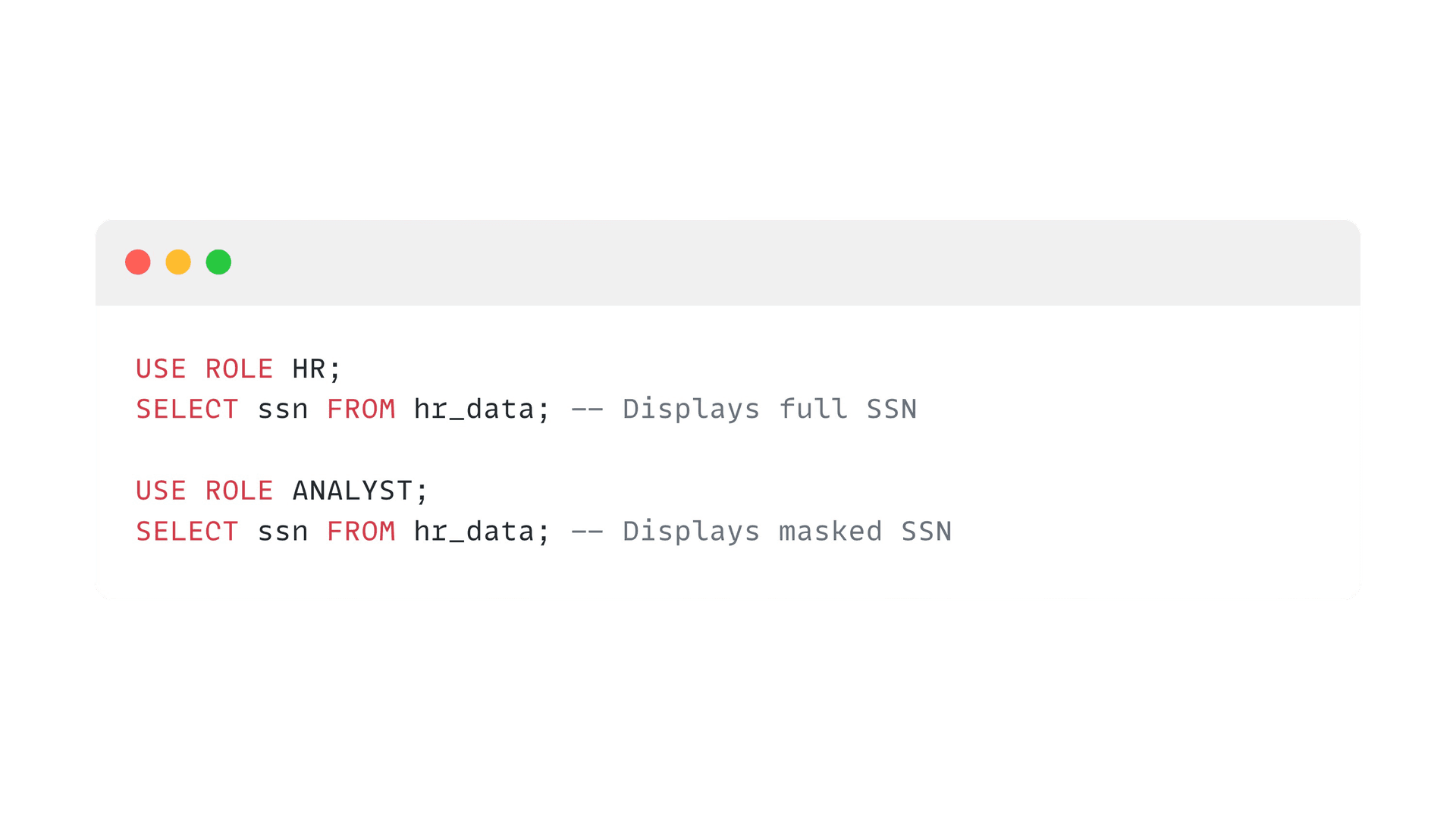

Masking policies are schema-level objects applied to table or view columns. At query time, Snowflake automatically rewrites the query and applies the policy everywhere the column appears. Whether the user sees real or masked data depends on conditions in the policy (often based on CURRENT_ROLE() or IS_ROLE_IN_SESSION())

Implementing Data Masking in Snowflake

- Create a Masking Policy: Define a policy using SQL expressions to specify how data should be masked based on user roles.

- Apply the Policy to a Column: Use ALTER TABLE to set the masking policy on specific columns.

- Test with Different Roles: Execute queries under various roles to ensure masking behaves as intended.

Use Cases for Data Masking

- Role-Based Access: Allow analysts to work with production data without exposing sensitive values. (E.g. analysts see only the last 4 of a phone number or SSN, while auditors see the full data.)

- Testing Environments: Provide realistic test data by masking PII in production tables (so testers can run queries without risk).

- Compliance: Meet regulatory requirements by ensuring only authorized roles can view sensitive data. Masking helps show compliance by demonstrating data is hidden in query results.

- Data Sharing: When sharing data externally (e.g. via Snowflake Secure Data Sharing), apply masking policies to protect sensitive fields.

Understanding Tokenization

Tokenization replaces sensitive data with non-sensitive placeholders (tokens) before storing it in Snowflake. The original data is stored securely outside Snowflake, and authorized users can retrieve it via detokenization processes.

Unlike masking, tokenization alters the data at rest. In Snowflake, tokenization is done via external tokenization: data is sent to a token service (or in-house function) that returns tokens, and Snowflake stores those tokens instead of the original values. Original data is kept in a secure token vault or key-management system. At query time, Snowflake can call an external function to detokenize values on the fly (for roles that should see real data).

Implementing Tokenization in Snowflake

- Set Up a Tokenization Service: Utilize a third-party or custom service to tokenize data before loading it into Snowflake.

- Create an External Function: Define a function in Snowflake that calls the tokenization service to detokenize data when necessary.

- Create a Masking Policy: Implement a policy that uses the external function to detokenize data for authorized roles.

- Apply the Policy to a Column: Use ALTER TABLE to set the masking policy on tokenized columns.

- Test with Different Roles: Verify that only authorized roles can view detokenized data

Use Cases for Tokenization

- Payment processing (PCI DSS): Store only tokenized credit card numbers in Snowflake. Tokens are meaningless if breached. Actual card data is fetched only when needed (e.g. refund) via detokenization.

- Identity data (HIPAA/PII): Personally Identifiable Information (SSNs, emails, phone numbers) are tokenized to minimize exposure. Analytics can still match on tokens if format-preserving, enabling joins without revealing data.

- Multi-tenant/Dev environments: Dev/test accounts get tokenized data so no sensitive info is present, while prod systems store tokens with a secure link to real data.

- Data sharing and collaboration: Shared tables contain tokens instead of real values, preventing any external party from seeing actual data. The token system owner controls detokenization.

Summary and Best Practices

Both data masking and tokenization are essential strategies for protecting sensitive data in Snowflake. Choosing the appropriate method depends on the specific use case, data sensitivity, and compliance requirements.

Best Practices:

- Least Privilege Access: Assign roles carefully to ensure users have only the necessary access to data.

- Centralized Policy Management: Manage masking policies centrally to maintain consistency and ease of administration.

- Regular Testing: Continuously test masking and tokenization implementations to ensure they function as intended.

- Integration with Token Services: Ensure synchronization between Snowflake roles and external token services to prevent access issues.

- Monitoring and Auditing: Utilize Snowflake's Access History and query logs to monitor data access and policy application.

- Format Consistency: Use format-preserving tokenization if downstream systems require specific data formats.

- Compliance Documentation: Maintain thorough documentation of data protection measures to satisfy audit and compliance requirements.

Implementing these practices will enhance data security and ensure compliance within the Snowflake environment.